Current Projects

Iris: Designing Real Time Gaze Visualizations

Researchers: Jeff Brewer and Sarah D'Angelo

Eye movements contain a wealth of information about how we process visual information. The Iris platform allows users to design gaze visualizations that represent their eye movements in real time. Visualizations can be customized along several features, including size, color, number of previous fixations, smoothing, opacity, and style.
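As an illustration of how features such as smoothing, number of previous fixations, and opacity might interact, here is a minimal sketch in Python. It is not the Iris implementation: the `GazeTrail` class, its parameter names, the moving-average smoothing, and the linear opacity fade are all assumptions chosen for the example.

```python
from collections import deque
from typing import Deque, List, Tuple

Point = Tuple[float, float]


class GazeTrail:
    """Hypothetical helper: smooths gaze samples and keeps a fading trail."""

    def __init__(self, history: int = 5, smoothing: int = 3,
                 max_opacity: float = 0.8) -> None:
        if history < 1 or smoothing < 1:
            raise ValueError("history and smoothing must be at least 1")
        self.max_opacity = max_opacity
        # Raw samples used for the moving average (assumed smoothing method).
        self._raw: Deque[Point] = deque(maxlen=smoothing)
        # Smoothed points that are still drawn on screen.
        self._trail: Deque[Point] = deque(maxlen=history)

    def add_sample(self, x: float, y: float) -> Point:
        """Add one raw gaze sample and return the smoothed position."""
        self._raw.append((x, y))
        n = len(self._raw)
        smoothed = (sum(p[0] for p in self._raw) / n,
                    sum(p[1] for p in self._raw) / n)
        self._trail.append(smoothed)
        return smoothed

    def markers(self) -> List[Tuple[float, float, float]]:
        """Return (x, y, opacity) per trail point; the newest is most opaque."""
        n = len(self._trail)
        return [(x, y, self.max_opacity * (i + 1) / n)
                for i, (x, y) in enumerate(self._trail)]
```

A renderer could call `add_sample` for each sample from the tracker and redraw the points returned by `markers()`. A larger `smoothing` value reduces jitter but makes the marker lag behind the eye, which is the kind of trade-off a user would tune by looking at the result.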

Collaboration Dynamics Across Language Communities

The past two decades have seen an explosion of impactful social computing projects, such as Wikipedia, Zooniverse, and Linux. These projects are developed by increasingly diverse communities of contributors, who develop varying collaborative processes. However, while tasks increasingly require collaboration across linguistic and cultural boundaries, existing research still examines collaborative processes largely within English-speaking communities. This project aims to (1) understand differences in collaboration dynamics across social computing platforms that span linguistic and cultural boundaries, (2) investigate how these differences may bias content production, (3) explore how multilingual users currently span boundaries to mitigate content asymmetries, and (4) design tools that intelligently facilitate collaboration across existing linguistic and cultural boundaries.

Stereotype Threat in Collaborative Contexts

Stereotype threat describes the phenomenon of being at risk of confirming negative stereotypes associated with one's social identity group. Our research builds on existing work to study racial minorities in digital working environments. We aim to investigate how minorities are affected by their digital environments while completing collaborative tasks, as well as how these environments influence the ways in which minorities interact and perform.

Older Adult Blog Analysis

Researchers: Mark Diaz, Anne Marie Piper

Counter to popular stereotypes, a significant community of older adults are active Internet users and bloggers. In this project we are qualitatively and quantitatively analyzing linguistic and rhetorical devices used by one prominent blog author to understand how they articulate anti-ageist ideologies.

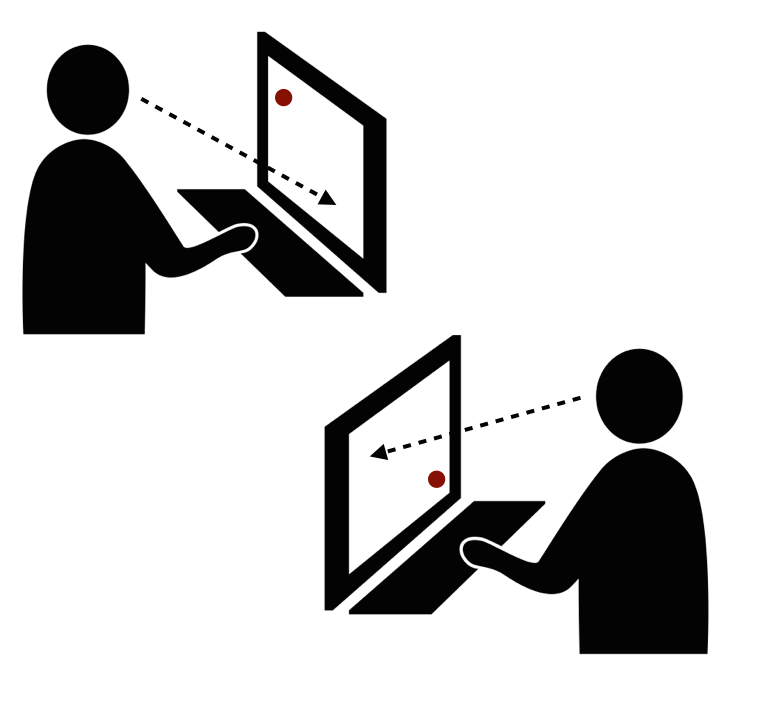

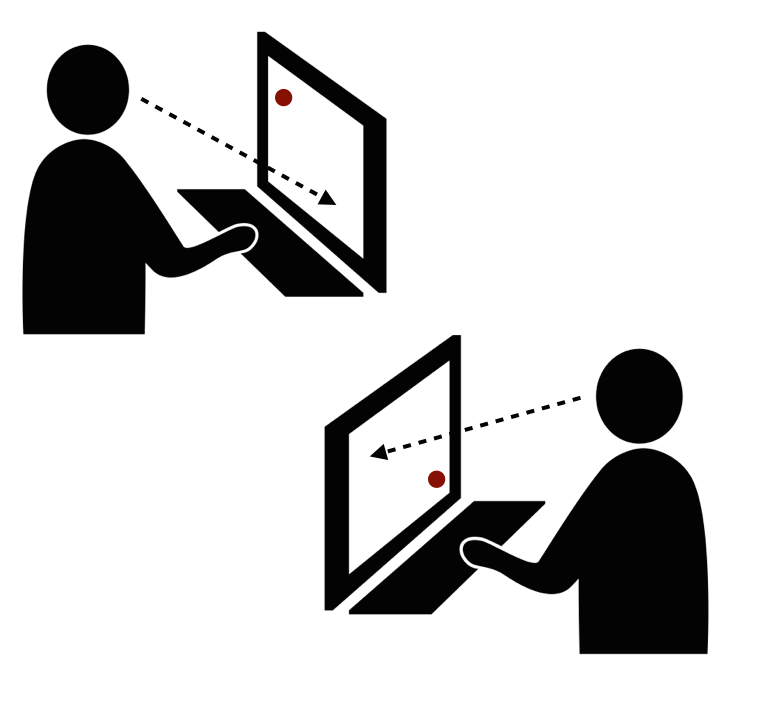

Shared Gaze Awareness for Remote Collaboration

Distributed environments lack many of the rich interpersonal cues that are leveraged in effective co-located collaborative work. One way to integrate non-verbal cues into distributed settings to support collaboration is through shared gaze awareness. This technique involves collecting eye movement data from two collaborators and displaying that information on each individual’s screen. We are developing novel representations of gaze awareness to improve collaboration in remote work.

Perceptually Aware Visualizations of Non-Visual Artifacts

Researchers: Noah Liebman, Bryan Pardo

Computer-based user interfaces are predominantly visual, so most computer-based work requires visual representations of whatever is being acted on. But people also use computers to interact with data representing non-visual phenomena like sound. To be useful, visual representations of non-visual artifacts should correspond to how people perceive the original artifacts. We are developing visualizations of multitrack audio based on computational models of human auditory perception. Using these models, we believe our visualizations will present audio in a more useful way to audio engineers.

Past Projects

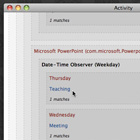

Rapid Development Platform for Human Evaluation of Eye Tracking Algorithms and Visualizations

For an eye tracking system to work effectively for the user, algorithms serve two purposes: (1) transforming raw data from the tracker into a form appropriate for the user experience (e.g., screen coordinates or a true/false statement about whether gaze is in a particular area), and (2) visualizing data from the eye tracker so that the user understands how their gaze affects the system. Designing the tracking and visualization algorithms often requires making decisions that can only be evaluated in tandem and by a human. Our platform uses the Python web framework Flask to enable developers to quickly iterate on and test these algorithms through a locally hosted web interface.
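The first kind of algorithm described above can be sketched in a few lines of Python. This is an illustrative example under stated assumptions, not the platform's code: it assumes the tracker reports normalized coordinates in the range 0 to 1, and the names `Region`, `to_screen`, and `gaze_in_region` are hypothetical. The Flask layer is omitted so the sketch stays self-contained.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Region:
    """Hypothetical rectangular area of interest, in screen pixels."""
    left: int
    top: int
    width: int
    height: int

    def contains(self, x: int, y: int) -> bool:
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


def to_screen(nx: float, ny: float,
              screen_w: int, screen_h: int) -> Tuple[int, int]:
    """Map normalized gaze (assumed 0..1) to pixel coordinates."""
    # Clamp out-of-range samples, which trackers can emit near screen edges.
    nx = min(max(nx, 0.0), 1.0)
    ny = min(max(ny, 0.0), 1.0)
    # Keep the result inside the last valid pixel column and row.
    x = min(int(nx * screen_w), screen_w - 1)
    y = min(int(ny * screen_h), screen_h - 1)
    return x, y


def gaze_in_region(nx: float, ny: float, screen_w: int, screen_h: int,
                   region: Region) -> bool:
    """True/false statement about whether gaze falls in a given area."""
    x, y = to_screen(nx, ny, screen_w, screen_h)
    return region.contains(x, y)
```

In a setup like the one described, functions of this kind could sit behind a locally hosted web endpoint, so a developer could change the clamping or the hit-test rule and immediately see the effect on the paired visualization.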

Tactile Communication in Collaboration

Researchers: Noah Liebman, Jeremy Birnholtz

In distributed work and social scenarios, it is often difficult to coordinate remotely with others to complete tasks or to interact informally. We are experimenting with the use of haptic feedback to support coordination and improve awareness by haptically displaying information about a collaborator’s availability. To approach this, we built a system for conveying availability information through texture on a variable friction tablet.

Talk About Things with Kids: Understanding World-Situated Language

Speakers use a broad range of verbal and non-verbal tools to communicate spatial information with collaborators during a shared task. In this project we explore the ways that speakers adapt their language use and non-verbal communication to accommodate different elements of their visual and spatial context and different attributes of their shared task.

Status Message Question Asking

People are increasingly turning to their online social networks to ask questions about a variety of topics from factual information to recommendations to favors. The aim of this project is to understand how individuals navigate online social spaces for their information needs, and to develop tools that integrate various information resources into social networks in useful ways. Recent work has investigated how and why information needs are routed differently to search engines and social networks, and the outcomes of these routing choices. Current work is testing the success of various audience targeting strategies for information seeking in online social networks, in terms of both informational and social benefits.

RichTOC: Exploring the Richness of Text-Only CMC

This project explores the richness of text-based computer-mediated communication (CMC) media such as email and instant messaging. Through the application of quantitative and qualitative techniques such as corpus analysis and chronemic analysis, and experimental methods, we reveal the richness of messaging that takes place when communicators use text-only CMC. Our work shows how simple text can convey information about the personality of the communicator, and about relationships, such as trust, that develop between communicators. We are also studying the many mechanisms communicators employ to communicate paralinguistic cues and emphasis through the creative manipulation of text.

Romantic Couple Conflict and Technology-Mediated Communication

This line of work examines the role of various communication technologies during romantic couple conflict. While much is known about romantic couple conflict in face-to-face settings, little is known about how technology might affect the communication processes or relational outcomes of the conflict. Our work has found that couples channel switch, or switch between face-to-face and different types of mediated communication, during one conflict episode. More recent work has revealed a variety of interpersonal motivations for channel switching and found both pros and cons for using mediated communication (e.g., text messaging, IM, email) during a conflict.

Culturally-Aware Applications

The goal of this project is to mine cultural diversity information from user-generated content and leverage this information in novel application contexts. In recent work, we have shown that world knowledge is encoded very differently across 25 language editions of Wikipedia. We are now building our first applications, like Omnipedia, which use the diversity found in Wikipedia's knowledge representation to facilitate greater cultural awareness for the user and the system. Key to this effort is WikAPIdia, our hyperlingual and spatiotemporally-enabled Wikipedia API. WikAPIdia is available as an open-source project, and we invite other researchers to join us in developing innovative "culturally-aware applications".

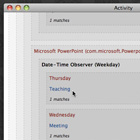

Group Dynamics on Wikipedia

New media forms offer a rich source of data for investigating social processes and group dynamics in computer-mediated media. The project is developing analytic tools to analyze information processing and consensus formation across groups on Wikipedia. Using methods from social network analysis and content analysis, this research will examine how social influence processes and norms emerge and become stabilized in collaborative environments. Results will help inform the development of decision-making models in complex information and social environments and show how technologies can be designed to effectively augment these processes.

Research Application Tools

To facilitate naturalistic eye-tracking research, we have developed a tool that allows us to synchronously code parallel streams of gaze data and automatically recognize when people are looking at objects in 3D space. We are also developing tools to explore the use of flexible, real-time gaze input.

Socially-Aware Computing for Collaborative Applications

Researchers: Chris Karr

A major hurdle in understanding the role of social context in computing lies in our inability to access real-world, everyday data in a sufficiently systematic way to develop models of various social contexts. To address this, we have developed a research platform that permits the automated collection of social context using sensors and machine learning techniques. We are currently investigating how to implement this strategy on desktop and mobile platforms in order to enable the construction of the next generation of intelligent and socially-aware software and services.

Talk About Things: Understanding World-Situated Language

Using naturalistic experimental contexts, we explore how attributes of shared visual information influence patterns of language use (e.g. spatial reference) and non-verbal communication (e.g. gaze). We develop computational models to describe the ways in which individuals integrate shared visual information with linguistic information when generating partner-specific speech, and study how individuals adapt their language processes depending on visual and spatial contexts and the nature of a shared task.